Abstract

Autism Spectrum Disorder (ASD) is a neurodevelopmental disorder that affects how children communicate and relate to other people and the world around them. Emerging studies have shown that neurofeedback training (NFT) games are an effective and playful intervention to enhance social and attentional capabilities for autistic children. However, NFT is primarily available in clinical settings, which makes it hard to scale. The intervention also demands deliberately designed gamified feedback that is fun and enjoyable, an area in which the HCI community has acquired little knowledge. Through a ten-month iterative design process with four domain experts, we developed Eggly, a mobile NFT game based on a consumer-grade EEG headband and a tablet. Eggly uses novel augmented reality (AR) techniques to offer engagement and personalization, enhancing children's training experience. We conducted two field studies (a single-session study and a three-week multi-session study) with a total of five autistic children to assess Eggly in practice at a special education center. Both quantitative and qualitative results indicate the effectiveness of the approach and contribute to the design knowledge of creating mobile AR NFT games.

What is Eggly?

Eggly is a mobile augmented reality (AR) neurofeedback training (NFT) game designed to enhance the social and communication skills of children with Autism Spectrum Disorder (ASD). The system pairs a portable, consumer-grade EEG headband with a tablet to gamify neurofeedback training, offering a playful intervention that can be used outside the clinic.

Why Eggly?

Key Challenges:

- Traditional NFT systems are costly and confined to clinical settings.

- Designing engaging, fun, and personalized games for children with ASD remains underexplored.

Solution:

Eggly bridges the gap by introducing:

- Accessibility through a consumer-grade EEG headband and mobile devices.

- Engagement with AR-based storytelling and interactive customization.

- Scientific grounding via real-time neurofeedback intended to strengthen mirror neuron activity.

How Does It Work?

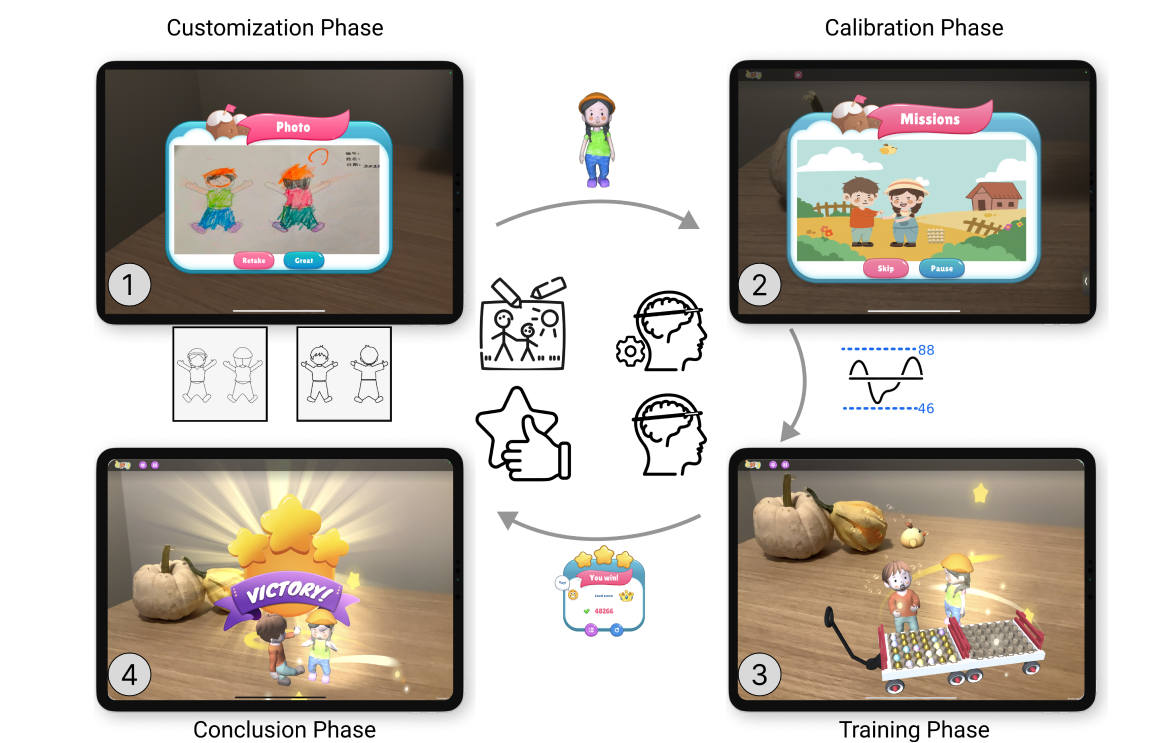

- Customization Phase: Children color and name game characters, which are then transformed into 3D avatars via AR.

- Calibration Phase: EEG data is used to set personalized thresholds for neurofeedback.

- Training Phase: Children collect eggs by focusing on social collaboration scenes, receiving gamified feedback on their performance.

- Conclusion Phase: Performance is summarized, and children celebrate achievements with rewards and animations.

Figure caption (partially recovered): (2) Calibration Phase: An introductory video explains the game story and tasks while determining the customized thresholds. (3) Training Phase: Playing Eggly in AR to collect eggs and control the bird's flight height using the thresholds, with performance being logged. (4) Conclusion Phase: Upon completion of the game, a victory scene is displayed, reporting the overall performance.
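The calibration and training phases described above can be illustrated with a minimal sketch. It is not Eggly's actual algorithm, which this page does not specify. It assumes the EEG pipeline already yields one numeric feedback value per time window, that higher values are the desired direction, and that the threshold is the calibration mean plus a fraction of the standard deviation. The function names, the `k` factor, and the `span` parameter are all hypothetical.

```python
from statistics import mean, stdev


def calibrate_threshold(baseline, k=0.5):
    """Personalized threshold: calibration mean plus k standard deviations.

    `baseline` is a list of per-window feedback values recorded during the
    calibration phase (assumed format, not taken from the Eggly paper).
    """
    if len(baseline) < 2:
        raise ValueError("need at least two calibration samples")
    return mean(baseline) + k * stdev(baseline)


def bird_height(sample, threshold, span):
    """Map one feedback value to a normalized flight height in [0, 1].

    The bird sits at mid-height (0.5) exactly at the threshold and reaches
    the top or bottom when the value is `span` units above or below it.
    """
    if span <= 0:
        raise ValueError("span must be positive")
    raw = 0.5 + (sample - threshold) / (2 * span)
    return max(0.0, min(1.0, raw))


def run_training(samples, threshold, span):
    """Return the height trace and the number of eggs collected.

    An egg is counted for every window at or above the threshold
    (a hypothetical reward rule chosen for illustration).
    """
    heights = [bird_height(s, threshold, span) for s in samples]
    eggs = sum(1 for s in samples if s >= threshold)
    return heights, eggs


if __name__ == "__main__":
    threshold = calibrate_threshold([1.0, 2.0, 3.0])
    heights, eggs = run_training([2.0, 2.5, 3.5], threshold, span=1.0)
    print(f"threshold={threshold:.2f} heights={heights} eggs={eggs}")
```

Deriving the threshold from each child's own calibration data, rather than using a fixed value, is what makes the feedback personalized: the same bird height then corresponds to the same relative effort for every child.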

Impact and Findings

Two field studies with five autistic children showed:

- Increased engagement compared to traditional 2D NFT games.

- Clear, easy-to-understand feedback mechanisms enhanced focus and self-regulation.

- A positive feedback loop boosted motivation and the imitation of social interactions.

Key features like AR integration and character customization made Eggly a more relatable and enjoyable experience.

Why It Matters

Eggly suggests that combining AR and NFT can improve interventions for ASD by:

- Lowering barriers to access.

- Offering a personalized and immersive learning experience.

- Promoting real-world application of social skills.

DOI: 10.1145/3596251

@article{lyu2023eggly,
  title={Eggly: Designing mobile augmented reality neurofeedback training games for children with autism spectrum disorder},
  author={Lyu, Yue and An, Pengcheng and Xiao, Yage and Zhang, Zibo and Zhang, Huan and Katsuragawa, Keiko and Zhao, Jian},
  journal={Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies},
  volume={7},
  number={2},
  pages={1--29},
  year={2023},
  publisher={ACM New York, NY, USA}
}